NeurIPS 2025: What Actually Matters

The papers that hint at the future of Vertical AI in 2026+

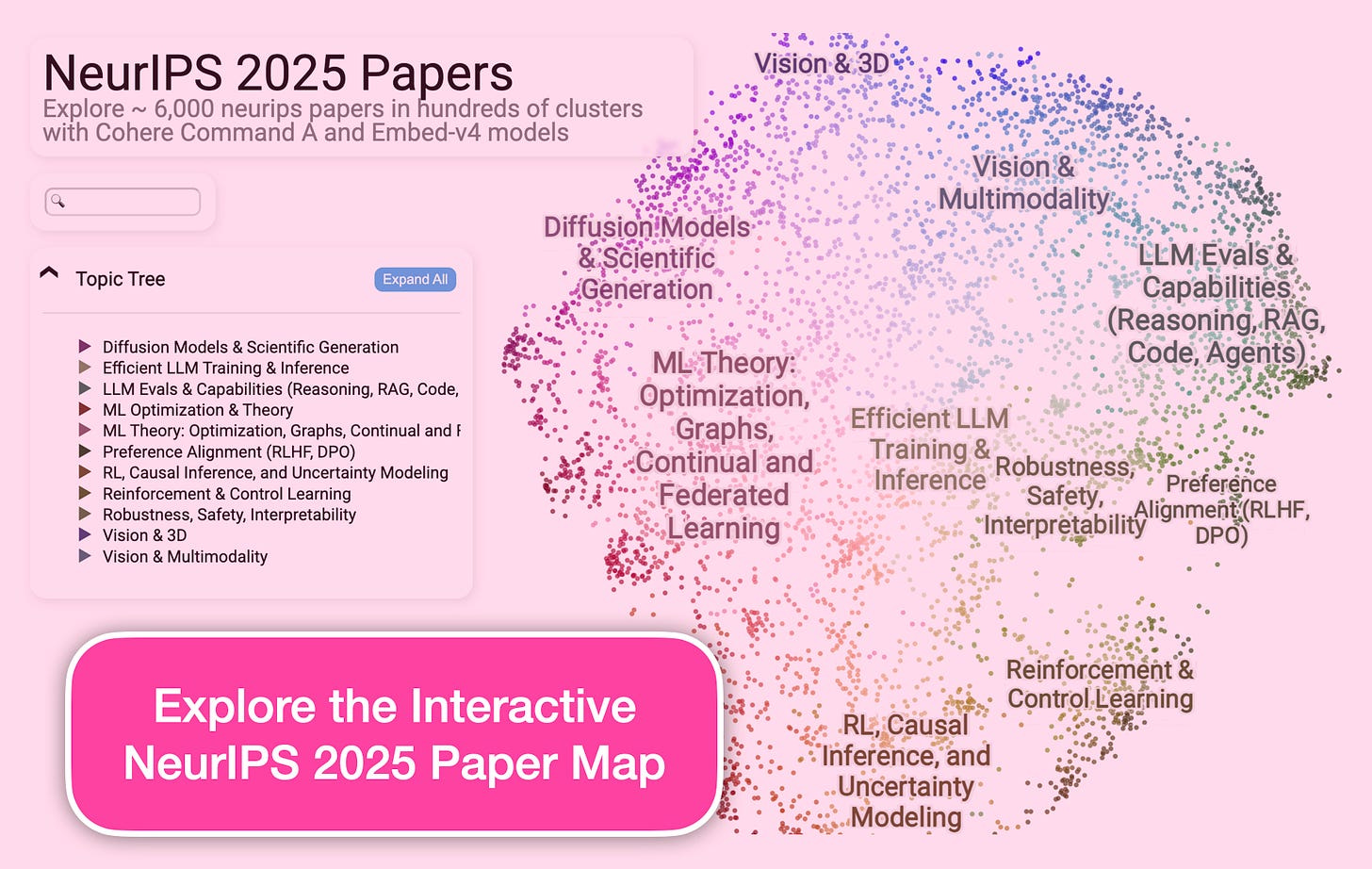

Earlier this month, the annual NeurIPS conference brought together tens of thousands of attendees in San Diego & Mexico City, reviewing and expounding on 20k+ research papers, giving the rest of us a flavor for the course of AI in the coming year.

The conference itself has changed along with the AI boom. No longer a niche academic gathering dominated by grad students, NeurIPS has completed its transition into a full-blown industry trade show. While not CES-level just yet, this year’s gathering was replete with big booths, commercial product launches, and quiet signaling (i.e. marketing) from the world’s largest model builders.

That’s probably natural given the importance and scale of the AI industry. A strange undercurrent was the inherent problem of information discovery that comes with ballooning attention and scale. Attendees discussed the unavoidable fact that, even amongst the 5k accepted papers, many were clearly written with AI assistance. Then there was the challenge of filtering down from 20k submissions, to 5k acceptances, to just a handful of awarded research papers. What percentage of papers at NeurIPS 2026 will be written, reviewed, and awarded (at least in part) by the very innovation the conference was convened to examine? Part concerning, part exciting.

In any case, at Euclid, we are far fewer in number—and almost certainly less neuronally gifted—than the NeurIPS selection committee. But we do think the developments they chose to highlight are an important barometer for the pulse and future of Vertical AI.

In this essay, we attempt to summarize the top five papers (all but one of those awarded). Hopefully, they serve as a helpful guide for fellow lowly investors and operators also seeking to understand the latest in AI research. Naturally, our main focus is to provide Vertical AI founders with relevant takeaways and implications as they think about where innovation is trending in 2026.

The Big Picture

Stepping back, here are three meta-themes from NeurIPS 2025:

Efficiency is in vogue: seeking gains from better plumbing, not bigger models.

Models are converging: differentiation may be moving up the stack.

Scaling laws are spreading beyond language: reinforcement learning is back.

With that framing, here are the five papers worth caring about.

1. Artificial Hivemind: The Open-Ended Homogeneity of Language Models

Link to the Full Paper | Takeaway: LLMs converge on the same ideas.

This paper formalizes something many practitioners have felt intuitively:

when you ask today’s frontier models open-ended questions, they increasingly sound like different skins on the same brain.

The authors introduce Infinity-Chat, a benchmark of 26,000 real, open-ended prompts with 31,000 human annotations. Across models and vendors, they observe striking convergence in phrasing, structure, and even values—especially on creative or judgment-based tasks.

This isn’t just about creativity. It’s about behavioral convergence. The models are collapsing into a shared “behavioral basin,” where vendor choice matters less than it used to.
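To make "convergence" concrete, here is a minimal sketch of how a team might score how alike several models' answers to one open-ended prompt are. It is not the paper's methodology: word-set Jaccard overlap is our own crude stand-in for similarity, and the model names and responses are made up for illustration.

```python
from itertools import combinations


def jaccard(a: str, b: str) -> float:
    """Jaccard similarity between the lowercase word sets of two strings."""
    set_a, set_b = set(a.lower().split()), set(b.lower().split())
    if not set_a and not set_b:
        return 1.0
    return len(set_a & set_b) / len(set_a | set_b)


def mean_pairwise_similarity(responses: dict[str, str]) -> float:
    """Average Jaccard similarity over every pair of model responses."""
    pairs = list(combinations(responses.values(), 2))
    if not pairs:
        raise ValueError("need at least two responses")
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)


# Hypothetical responses to one open-ended prompt (invented for illustration).
responses = {
    "model_a": "time is a river that carries us forward",
    "model_b": "time is a river that flows ever forward",
    "model_c": "time is a thief that steals our days",
}
score = mean_pairwise_similarity(responses)
print(f"mean pairwise similarity: {score:.3f}")
```

A score that creeps toward 1.0 across vendors on your own domain prompts would be one rough signal that switching models is buying you little diversity. In practice, an embedding-based similarity would likely be a better choice than raw word overlap.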

Why this matters

If all major models converge on an averaged-out worldview, any bias or blind spot propagates everywhere at once. Alignment and safety objectives—while necessary—may be quietly trading away diversity, local judgment, and minority preferences.

Implication for Vertical AI

Vertical software lives on context. What’s “right” in dentistry, construction lending, logistics, or insurance is rarely consensus-driven. This paper is a warning: if your vertical agent isn’t explicitly evaluated for domain-specific variation, it may just be echoing the global hive mind. So your “model-picking edge” may be irrelevant.

Differentiation increasingly comes from data, evaluation, and incentives, not which model you choose at which time.

2. Gated Attention for Large Language Models

Link to the Full Paper | Takeaway: Better attention without more compute.

This paper introduces a deceptively simple architectural change:

add a sigmoid gate after each attention head, effectively giving the model a dimmer switch to control how much each head influences the output.

Across ~30 large-scale experiments—including trillion-token regimes—the change improves training stability, long-context behavior, and overall performance. It also fixes a known pathology where early tokens dominate attention (“attention sinks”).

Importantly, this is not about scaling up. It’s about cleaner plumbing.
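For the curious, the "dimmer switch" can be sketched in a few lines. This is a toy, single-head illustration in plain Python, not the authors' implementation: we assume the gate is computed from each token's input through a hypothetical weight matrix `w_gate`, and we skip the learned query/key/value projections for brevity.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(matrix, vec):
    """Multiply a (rows x cols) matrix by a length-cols vector."""
    return [sum(w * v for w, v in zip(row, vec)) for row in matrix]


def attention_head(queries, keys, values):
    """Plain scaled dot-product attention for one head (no masking)."""
    scale = 1.0 / math.sqrt(len(keys[0]))
    outputs = []
    for q in queries:
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys]
        weights = softmax(scores)
        outputs.append([sum(w * v[d] for w, v in zip(weights, values))
                        for d in range(len(values[0]))])
    return outputs


def gated_attention_head(inputs, queries, keys, values, w_gate):
    """Scale each token's head output elementwise by sigmoid(w_gate @ input)."""
    head_out = attention_head(queries, keys, values)
    gated = []
    for x, out in zip(inputs, head_out):
        gate = [sigmoid(g) for g in matvec(w_gate, x)]  # each value lies in (0, 1)
        gated.append([g * o for g, o in zip(gate, out)])
    return gated


# Tiny example: 2 tokens of dimension 2, reused as queries, keys, and values.
tokens = [[1.0, 0.0], [0.0, 1.0]]
w_gate = [[0.0, 0.0], [0.0, 0.0]]  # zero weights, so every gate is sigmoid(0) = 0.5
plain = attention_head(tokens, tokens, tokens)
gated = gated_attention_head(tokens, tokens, tokens, tokens, w_gate)
```

With all-zero gate weights, every output is simply halved. During training, the gate weights would be learned, letting the model turn individual heads up or down per token.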

Why this matters

These are the kinds of changes that don’t make headlines—but six months later, you notice that models of the same size are cheaper, more stable, and hallucinate less. This is efficiency via design, not brute force.

Implication for Vertical AI

Vertical workflows are dominated by long, messy inputs: EMR exports, contracts, claims histories, transaction logs, underwriting packages, etc. Anything that improves long-context reliability without exploding token costs directly upgrades product quality, and that is a huge win.

App-layer operators won’t be implementing gated attention themselves. But you’ll benefit from it as it’s (inevitably) adopted by models, and that may drive significant improvements to Vertical AI workflows.

3. Does Reinforcement Learning Actually Create Better Reasoning?

Link to the Full Paper | Takeaway: RL sharpens, doesn’t invent reasoning.

This paper delivers a much-needed reality check on Reinforcement Learning (RL)-based fine-tuning (RLHF, RLVR). The authors show that while RL improves sampling efficiency—helping models pick good answers more often—it does not create fundamentally new reasoning capabilities.

Those reasoning paths were already present in the base model. RL simply re-weights them. New reasoning patterns (i.e. creativity) are instead introduced by distillation.
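One way to see the difference between "picks good answers more often" and "can solve more problems" is the pass@k metric: the chance that at least one of k sampled attempts is correct. The sketch below is ours, not the authors' code. We are assuming pass@k as the yardstick, and the per-problem counts are invented purely to illustrate the shape of the argument.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Probability that at least one of k attempts, drawn without replacement
    from n attempts of which c are correct, is correct."""
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(correct_counts, n, k):
    """Average pass@k over a list of per-problem correct-attempt counts."""
    return sum(pass_at_k(n, c, k) for c in correct_counts) / len(correct_counts)


# Hypothetical correct-attempt counts out of n = 100 samples on four problems.
n = 100
base_counts = [30, 20, 10, 2]  # base model: unreliable, but solves each problem sometimes
rl_counts = [90, 80, 60, 0]    # RL-tuned model: far more reliable, never solves the last one

for k in (1, 10, 100):
    base = benchmark_pass_at_k(base_counts, n, k)
    tuned = benchmark_pass_at_k(rl_counts, n, k)
    print(f"k={k:>3}  base={base:.3f}  rl={tuned:.3f}")
```

In this made-up example the RL-tuned model wins easily at k = 1, while the base model catches up and overtakes it at large k because it occasionally solves the problem the tuned model never does. That is the "sharpens, doesn't invent" pattern in miniature.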

Why this matters

This punctures a common industry narrative: that we can keep unlocking smarter models just by piling on more RL. Today’s RL techniques mostly polish existing intelligence rather than expand it.

Implication for Vertical AI

If your strategy is “we’ll RL-fine-tune a base model to become a domain expert,” this paper is a constraint you should internalize. RL remains great for reliability, consistency, and performance optimization.

Domain intelligence will still come from base model choice (and its reasoning / memory capacity), data, tooling, and workflow integration.

4. Why Diffusion Models Don’t Memorize

Link to the Full Paper | Takeaway: Diffusion generalizes before memorizing.

This theory paper tackles a heated debate: are diffusion models just memorizing their training data?

The authors show diffusion training has two phases: (1) Learning: An early phase where the model learns to generate high-quality, diverse samples; and (2) Memorization: A later phase where training data is committed wholesale as the model begins to overfit to hyper-specific inputs.

The crucial finding here is that, as dataset size grows, the memorization phase gets pushed further out. This creates a widening safe training window (i.e. a longer period in which the model can beef up without risking the memorization / overfitting that could equate to IP theft).
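What might "stopping inside the safe window" look like in practice? A rough sketch, under our own assumptions rather than the paper's method: at each checkpoint, generate a batch of samples and count how many land almost exactly on a training example. The function names, the distance threshold, and the toy 2-D "samples" below are all hypothetical. Real image or signal data would need a feature space where distance is meaningful.

```python
import math


def nearest_distance(sample, training_set):
    """Euclidean distance from one sample to its closest training example."""
    return min(math.dist(sample, t) for t in training_set)


def memorized_fraction(generated, training_set, threshold):
    """Share of generated samples lying within `threshold` of a training sample."""
    if not generated:
        raise ValueError("need at least one generated sample")
    hits = sum(nearest_distance(g, training_set) <= threshold for g in generated)
    return hits / len(generated)


def should_stop(generated, training_set, threshold=0.05, max_fraction=0.1):
    """Flag a checkpoint once too many samples look like near-copies."""
    return memorized_fraction(generated, training_set, threshold) > max_fraction


# Toy 2-D data standing in for training examples and generated samples.
training = [(0.0, 0.0), (1.0, 1.0), (2.0, 0.0)]
early_samples = [(0.5, 0.4), (1.5, 0.6), (0.9, 0.2), (1.8, 0.7)]   # novel points
late_samples = [(0.0, 0.01), (1.0, 1.02), (2.0, 0.0), (0.5, 0.4)]  # mostly near-copies

print(should_stop(early_samples, training))  # early checkpoint
print(should_stop(late_samples, training))   # late checkpoint
```

A check like this also gives a concrete, testable answer to the question of whether a model can regurgitate its training data, at least for the samples you bother to draw.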

Why this matters

This reframes IP and privacy debates. It gives a credible counter-argument against “diffusion is inherently theft.” Training choices allow AI engineers to hit pause before learning turns to rote memorization. So the bar can become: how much data did you use, how long did you train, did you stop before overfitting, can the model regurgitate our IP?

Implication for Vertical AI

Verticals using generative vision or signal models—radiology, pathology, industrial inspection—need principled ways to argue generalization over memorization. This paper provides that foundation.

This doesn’t eliminate data privacy risk, but it makes the discussion more objective and testable—and easier access to 1st-party data has clear value in Vertical AI.

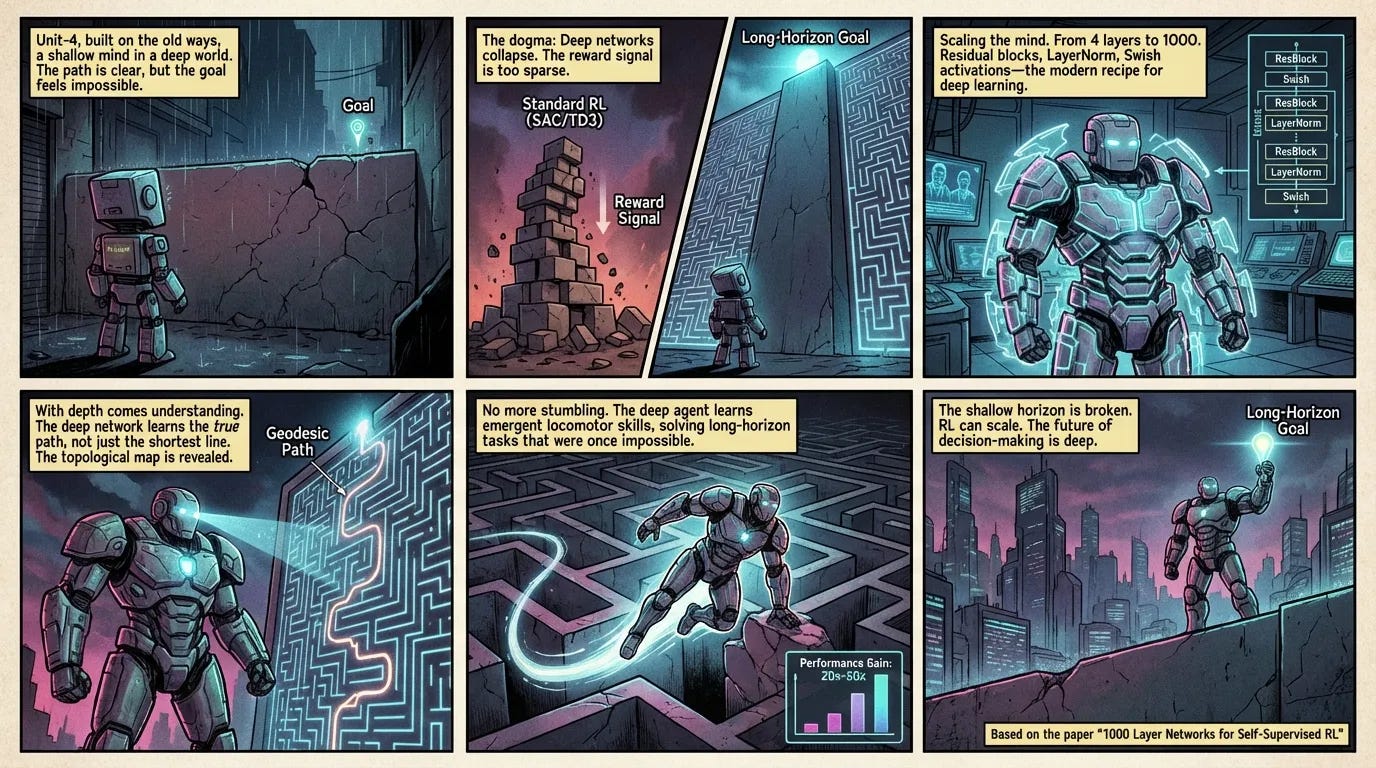

5. 1000-Layer Networks for Self-Supervised RL

Link to the Full Paper | Takeaway: Deep RL finally scales.

Historically, reinforcement learning (RL) relied on shallow networks (2–5 layers) because researchers assumed RL didn’t generate enough signal to justify depth.

This paper blows that assumption up.

By training hundreds-to-thousands-layer networks in self-supervised, goal-conditioned environments, the authors achieve 2–50× performance improvements on control tasks.

Once researchers stopped being stingy with depth and compute, RL started behaving like language and vision did a few years ago: power-law scaling works.

Why this matters

It dramatically raises the ceiling for agentic systems. While household robots aren’t here yet, the foundational work that enables them is arriving.

Implication for Vertical AI

This likely matters most imminently for physical AI—think robotics in ops-heavy verticals (warehouses, logistics, healthcare, industrial automation)—where AI won’t just recommend actions but execute them.

Beyond robotics, these RL findings should also extend to any in-situ vertical learning in which a task (rather than reasoning itself) needs to be optimized.

The questions to ask in 2026

Our single takeaway from NeurIPS 2025 was that if—as a founder or investor—you’re still just asking “what model are we using?”, you’re probably asking the wrong question. Or at least there are others you should be asking in tandem.

Better questions might be:

Can this model reason reliably & leverage tooling to do so?

How does it learn from the workflow it claims to serve, and recall learnings?

Does it learn, or memorize—and what does this mean for privacy & IP risks?

How can we granularly measure reasoning, learning, memory, and privacy?

Can the model run efficiently, considering where the user is & what they will pay?

Alongside the number of questions and opportunities in Vertical AI, we estimate the number of research submissions to NeurIPS will only grow in 2026. So beyond these practical questions, we’re quite curious to see how the greatest minds in the space will leverage AI to solve their own problem.

Perhaps next year, humans won’t choose the winners at all.

Thanks for reading Euclid Insights! If you’re building in Vertical AI and thinking about how the latest developments can accelerate your idea, we would love to trade notes. Reach out via LinkedIn or email.