Deus Ex CapEx

Examining the assumptions and incentives behind the economy-wide bet on GPUs

Two years ago, Sequoia’s David Cahn wrote a piece introducing what he called AI’s $200B Question. His main takeaway was that there was a significant gap between the capital being invested in AI infrastructure and the revenues those investments were generating. He quantified the gap by estimating the lifetime revenue required to repay the upfront capital expenditures (CapEx) on GPUs, data centers, and supporting infrastructure. At the time, the mismatch totaled roughly $200B. Cahn explains the math and reasoning behind his calculation:

Consider the following: For every $1 spent on a GPU, roughly $1 needs to be spent on energy costs to run the GPU in a data center. So if Nvidia sells $50B in run-rate GPU revenue by the end of the year (a conservative estimate based on analyst forecasts), that implies approximately $100B in data center expenditures. The end user of the GPU—for example, Starbucks, X, Tesla, Github Copilot or a new startup—needs to earn a margin too. Let’s assume they need to earn a 50% margin. This implies that for each year of current GPU CapEx, $200B of lifetime revenue would need to be generated by these GPUs to pay back the upfront capital investment.
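To make Cahn’s arithmetic explicit, here is a minimal Python sketch. The function and parameter names are our own illustrative labels, not anything from Cahn’s piece; the defaults simply encode his stated assumptions ($1 of energy and data center spend per $1 of GPU, and a 50% end-user margin).

```python
def lifetime_revenue_required(gpu_spend_b: float,
                              energy_per_gpu_dollar: float = 1.0,
                              end_user_margin: float = 0.5) -> float:
    """Lifetime revenue (in $B) needed to pay back one year of GPU CapEx.

    gpu_spend_b: run-rate GPU revenue (Nvidia's sales), in $B.
    energy_per_gpu_dollar: energy/data center spend per $1 of GPU (Cahn: ~$1).
    end_user_margin: margin the end user of the GPU must earn (Cahn: 50%).
    """
    # Total data center cost: the GPUs plus the matching energy/facility spend.
    data_center_cost = gpu_spend_b * (1 + energy_per_gpu_dollar)
    # Revenue R at margin m must satisfy R * (1 - m) = cost, so R = cost / (1 - m).
    return data_center_cost / (1 - end_user_margin)


print(lifetime_revenue_required(50))  # 200.0
```

Setting `end_user_margin` to 0 returns the $100B of raw data center spend, which makes clear that half of the $200B figure comes from the margin assumption alone.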

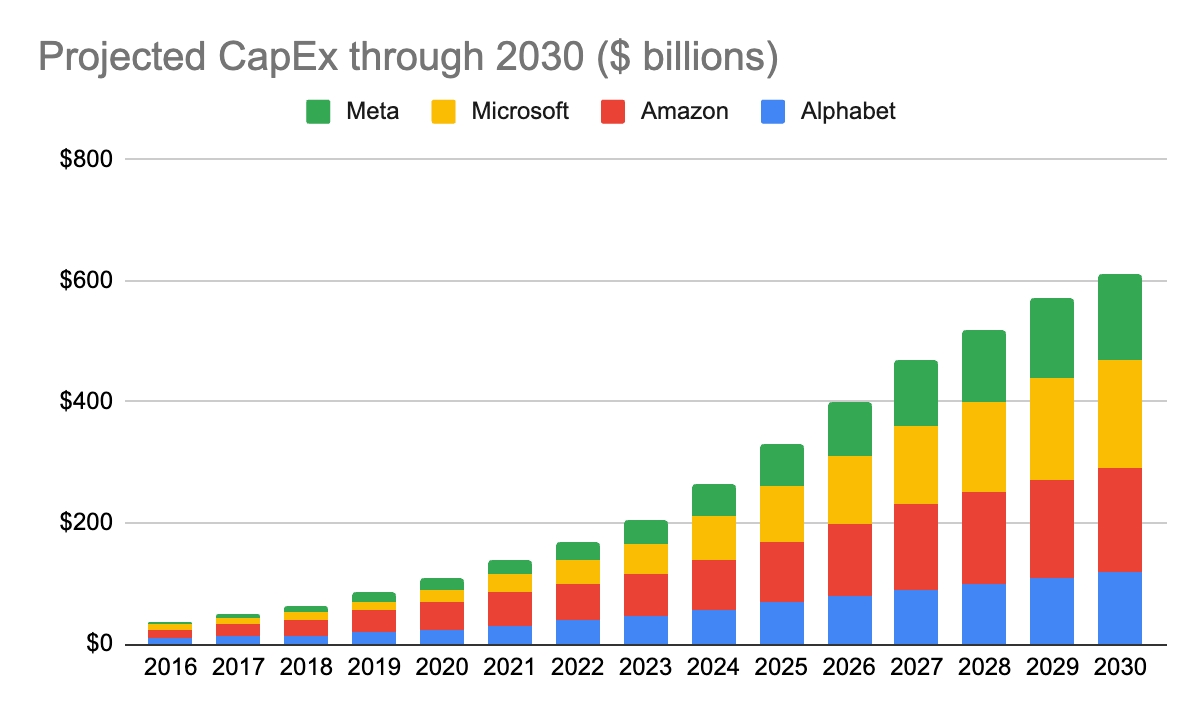

Cahn’s framing resonated because it crystallized something many already suspected: capital spending was outpacing monetization. Hyperscalers and AI-native firms are investing billions in GPUs, expecting their revenues to scale in lockstep with these investments. Since Cahn first published his piece, the AI revenue problem has grown threefold: the $200B figure became AI’s $600B question a year later, and it’s not stopping there. We extended Cahn’s initial analysis to 2025 and 2026, and projected data center spending points to an AI payback number approaching $1T next year.

In his revenue analysis, Cahn assumed that Google, Microsoft, Apple, and Meta could each generate $10B annually in new AI-related revenue, plus $5B each in new AI revenue for Oracle, ByteDance, Alibaba, Tencent, X, and Tesla. An analysis from The Information estimated that AI-native companies are currently at an $18.5B run rate. Let’s round up to $20B for simplicity.

Added together, that’s $90B of new AI-related revenue, generously. For 2025, that leaves a shortfall of roughly $780B in AI revenue that needs to be filled for each year of CapEx at this level. In effect, the gap is approaching a trillion dollars in net-new recurring revenue, and the shortfall is only expected to grow in 2026. For context, total global spending on cloud infrastructure and applications is expected to be approximately $500B this year. The good news is that AI revenue is growing at an incredible pace, albeit with uncertainties around retention and a long way to go. The bad news is that the CapEx explosion does not appear to be slowing down.
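The revenue tally above, as a minimal Python sketch that uses only the assumptions quoted in the text. The one derived figure is the implied 2025 payback requirement: since the shortfall is the payback requirement minus new AI revenue, adding the stated $780B shortfall back to the $90B of revenue implies roughly $870B, consistent with the “approaching $1T” framing.

```python
# New annual AI revenue assumptions from the text, in $B.
big_tech = {"Google": 10, "Microsoft": 10, "Apple": 10, "Meta": 10}
others = {name: 5 for name in ["Oracle", "ByteDance", "Alibaba", "Tencent", "X", "Tesla"]}
ai_native = 20  # The Information's $18.5B run rate, rounded up

total_revenue = sum(big_tech.values()) + sum(others.values()) + ai_native

# Stated 2025 shortfall; shortfall = payback requirement - revenue.
shortfall_2025 = 780
implied_payback = total_revenue + shortfall_2025

print(f"New AI revenue: ${total_revenue}B")
print(f"Implied 2025 payback requirement: ${implied_payback}B")
```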

The AI Data Center Spending Bonanza

Renaissance Macro Research analyst estimates suggest that, so far in 2025, AI data center spending has contributed more to US GDP growth than all US consumer spending, marking a historic first. Another remarkable statistic: spending on data center construction, excluding the cost of all the technology inside, is expected to surpass spending on office building construction this year.

Microsoft, Google, Amazon, and Meta alone are forecasting a record $364 billion of capital expenditures for 2025. That represents nearly 50% of their projected revenue for 2025 and is eating drastically into their free cash flow (FCF). As the WSJ reports:

From 2016 through 2023, free cash flow and net earnings of Alphabet, Amazon, Meta and Microsoft grew roughly in tandem. But since 2023, the two have diverged. The four companies’ combined net income is up 73%, to $91 billion, in the second quarter from two years earlier, while free cash flow is down 30% to $40 billion, according to FactSet data.
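Working backward from the quoted percentages shows how sharp the divergence is. This is a rough sketch; the two-years-earlier baselines are our own arithmetic from the quote, not reported figures.

```python
# Combined quarterly figures for Alphabet, Amazon, Meta, and Microsoft ($B), per the quote.
net_income_now = 91
fcf_now = 40

# Derived baselines two years earlier (our arithmetic, not reported figures).
net_income_then = net_income_now / 1.73  # net income is "up 73%"
fcf_then = fcf_now / (1 - 0.30)          # free cash flow is "down 30%"

print(f"Two years earlier: net income ~${net_income_then:.1f}B, FCF ~${fcf_then:.1f}B")
print(f"Latest quarter:    net income ${net_income_now}B, FCF ${fcf_now}B")
print(f"FCF / net income: {fcf_then / net_income_then:.2f} -> {fcf_now / net_income_now:.2f}")
```

Under that arithmetic, quarterly free cash flow (about $57B) slightly exceeded net income (about $53B) two years earlier; in the latest quarter, net income is more than double free cash flow.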

In aggregate, an analysis by Morgan Stanley suggests that total spending on AI data centers could approach $3 trillion by 2030. With these figures in mind, our AI revenue payback estimates might even be too generous. One analyst noted that hyperscalers would need to double current revenues from their data centers just to cover the annual depreciation costs of their infrastructure build-out, and that’s for a single year of spending on a 10-year depreciation schedule.
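The point about a single year of spending is easiest to see as stacked vintages of CapEx. The sketch below makes simplifying assumptions of our own: straight-line depreciation, zero salvage value, and, hypothetically, the four companies’ $364B of 2025 CapEx repeated for three consecutive years under the 10-year schedule mentioned above.

```python
def annual_depreciation(capex_by_year: list[float], useful_life: int, year: int) -> float:
    """Straight-line depreciation expense in `year` (0-indexed), summed over every
    CapEx vintage placed in service so far. Assumes zero salvage value."""
    total = 0.0
    for vintage, capex in enumerate(capex_by_year[: year + 1]):
        if year - vintage < useful_life:  # this vintage is still being depreciated
            total += capex / useful_life
    return total


# Hypothetical: the four companies' $364B of 2025 CapEx, repeated for three years ($B).
capex = [364.0, 364.0, 364.0]
for year in range(len(capex)):
    expense = annual_depreciation(capex, useful_life=10, year=year)
    print(f"Year {year + 1}: ~${expense:.1f}B of depreciation")
```

Each new year of spending adds another layer of expense on top of the last, so annual depreciation roughly triples by year three; passing a shorter `useful_life` scales every layer up proportionally.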

If these economics persisted in isolation, we might expect an eventual slowdown in capital deployment. Instead, a debt financing bonanza is helping to fuel the current cycle, with private credit markets emerging as a significant accelerator of data center build-outs. Recent reports suggest that less than half of the cash for data center build-outs is expected to come from the hyperscalers themselves. The remainder will be raised from more traditional investors, with private credit expected to fund the largest share. UBS estimates $50B in quarterly flows into the private credit markets alone, even before accounting for rumored $29B mega-deals from Meta and others.

Beyond traditional debt, new data centers are being financed through structured products such as commercial mortgage-backed securities (CMBS). The volume of CMBS tied to AI infrastructure grew 30% year-over-year to $15.6B in 2024. A recent report suggested that roughly half of this year’s data center issuance to date has come through CMBS. This surge should worry anyone who lived through the 2008 global financial crisis (GFC), especially since the underlying asset here could be significantly more volatile than the real estate behind that era’s securitizations.

Given the rapid advancement in GPUs themselves, it’s unclear how financiers are even assigning recovery value to such assets. CoreWeave assigns a 6-year life to its fleet. That may not seem far from estimates like Massed Compute’s, which suggest that most high-end server-grade chips lose 20-30% of their value in the first year, with depreciation declining to 10-15% in subsequent years. However, innovation cycles from suppliers like Nvidia are also speeding up. Jensen Huang recently joked that post-Blackwell, “you couldn’t give Hoppers away.” Logically, obsolescence timelines for cutting-edge chips align with the ephemeral “frontier lifetimes” of the foundation models that rely on them, which have trended down to 6-15 months.[1] Yet, CoreWeave aside, since 2023 Microsoft, Meta, Amazon, Oracle, and Alphabet have all extended their depreciation timelines to 5.5-6 years.[2] One has to question the incentives at play here: according to an analysis by The Economist, right-sizing to a three-year depreciation cycle would shave $780 billion off the collective enterprise value of the “AI Big 5.”
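To see why the choice of useful life matters, the sketch below compares book value per $100 of chips under 6-year and 3-year straight-line schedules with a hypothetical resale curve loosely based on the Massed Compute ranges above. The 25% first-year and 12.5% later-year loss rates are our assumption (midpoints of those ranges, applied to remaining value); they are not Massed Compute’s published method.

```python
def straight_line_book_value(cost: float, useful_life: float, years: int) -> float:
    """Book value after `years` of straight-line depreciation, zero salvage value."""
    return max(cost * (1 - years / useful_life), 0.0)


def resale_value(cost: float, years: int,
                 first_year_loss: float = 0.25, later_loss: float = 0.125) -> float:
    """Hypothetical market value: loses `first_year_loss` in year one, then
    `later_loss` of the remaining value in each later year."""
    value = cost
    for year in range(1, years + 1):
        value *= 1 - (first_year_loss if year == 1 else later_loss)
    return value


print("year  book(6y)  book(3y)  resale")
for t in range(1, 7):
    print(f"{t:>4}  {straight_line_book_value(100, 6, t):8.1f}  "
          f"{straight_line_book_value(100, 3, t):8.1f}  {resale_value(100, t):6.1f}")
```

Under these assumed rates, the resale curve tracks the 6-year schedule fairly closely, while the 3-year schedule writes the chips off entirely by year three and doubles the annual depreciation charge. If faster product cycles make the real resale curve much steeper than this, book values on a 6-year schedule would overstate what the chips could be sold or borrowed against.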

From the Erie Canal to Stargate

They say history rhymes. In the case of the ongoing AI data center boom, it’s hard to avoid seeing patterns repeated from past infrastructure bubbles. Many have noted the similarities to the telecom industry, which, in the late 1990s, financed massive fiber build-outs on the assumption of exponential traffic growth.

The nascent internet boom had investors throwing cash at startup web companies and broadband telecommunications carriers. They were right that the internet would drive a productivity boom, but wrong about the financial payoff. Many of those companies couldn’t earn enough to cover their expenses and went bust. In broadband, excess capacity caused pricing to plunge. The resulting slump in capital spending helped cause a mild recession in 2001.

Similarities abound in past manias around railways and canals. During a recent interview, Zuckerberg even directly acknowledged the parallels. The question is whether the same hangovers are inevitable: revenues lagging inflated expectations, defaults spiking, and assets eventually repriced at pennies on the dollar.

The difference today is scale: where fiber was a hundred-billion-dollar problem, AI capital expenditures are on track to be measured in trillions. What makes all of these analogies fascinating is that the bets were fundamentally correct from a technology perspective. But expectations and capital expenditures came too much, too soon. Overzealous financiers and retail investors lost their shirts, but in the long term, the economy benefited from the breakneck adoption of a key infrastructural enabler.

If GPU capacity is overbuilt today, some will lose out. We think, however, that it’s almost certainly a long-term positive for the startup ecosystem and the economy. As with many infrastructure manias before it, the expansion of internet infrastructure provided the necessary conditions for numerous transformational innovators (and venture-backed winners) to emerge. In the short term, however, things could get ugly.

For now, investors are pricing big tech as if its asset-heavy businesses will be as profitable as its asset-light ones. So far, “we don’t have any evidence of that,” said Jason Thomas, head of research at Carlyle Group. “The variable people miss out on is the time horizon. All this capital spending may prove productive beyond their wildest dreams, but beyond the relevant time horizon for their shareholders.”

We’re in an interesting situation where the power players (big investors, the Mag7, the chipmakers) all benefit from the cash spigot staying on. Just last week, reports emerged that OpenAI plans to spend around $100B renting servers from cloud providers over the next five years. This is in addition to the $350B it had already forecast to spend on compute through 2030. If these rumors are true, OpenAI’s annual outlay on data centers would reach $85B, roughly equal to Oracle’s and Salesforce’s combined revenue last year.

Just this week, moreover, Nvidia announced it would invest $100B in OpenAI to “help build and deploy at least 10 gigawatts of Nvidia systems” for its AI data centers. Nvidia has announced smaller but equally curious deals with Lambda and CoreWeave. While Nvidia has largely framed these deals as critical for customer diversification, we can’t help but notice the similarities with the telecom carriers and equipment providers of the dot-com era, when such deals were all the rage. Lucent and Nortel were infamous for “vendor financing,” in which they provided financing to, or took equity stakes in, telcos that in turn bought their equipment. Perhaps the most egregious was the practice of “round-trip revenue” booked by companies such as Global Crossing and WorldCom, which involved capacity swap deals with other backbone providers that artificially inflated revenue. The OpenAI deal appears to be structured as a (presumably non-binding) Letter of Intent.

Concurrently, Oracle announced it had secured contracts worth hundreds of billions of dollars to deliver cloud computing services to OpenAI and several other major clients. The news sent its stock up 30% (a bewildering move for a half-trillion-dollar company) and put co-founder Larry Ellison in contention for the title of world’s wealthiest individual. Debt-rating firm Moody’s, among other analysts, questioned how OpenAI would procure the cash to cover the contracts. Completing the cycle of insider dealing, then, is Nvidia’s aforementioned $100B promise.

Similar dynamics have played out in past infrastructure bubbles: front-loading growth, masking potential demand weakness, and using interlocking chains of investments, financings, and promises to build hype and valuation. The obfuscation today is nowhere near as blatant as at the height of the dot-com era, but some of the dynamics we’re seeing are head-turning. Much of the value assigned to future data center demand, for example, appears to be predicated not on actual bookings but on “options” to buy capacity. As mentioned, some of the big deals driving stock prices through the roof are no more than glorified handshakes. Should demand hit a road bump, who is left holding the bag? Certainly the least-informed retail investors, as well as those who own physical assets like data centers, and their debt providers.

Overall, investment exuberance in infrastructure, with its positive externalities for businesses, should be a massive boon for host economies, with benefits that generally outweighed the losses in preceding manias. But some elements of the current cycle are more precarious. The Mag7, which represents a third of the S&P 500 today, has significant income statement exposure to infrastructure depreciation, which an oversupply of compute could worsen. It’s also worth noting that, while CoreWeave et al. in many ways look like REITs, their underlying assets are valued very differently from real estate. While outdated chips are still useful, they have nowhere near the productive lifespan of traditional infrastructure such as bridges or power lines. Compared to what OpenAI has allocated for its Stargate data center project, the Erie Canal cost half as much in today’s dollars, recovered its costs in about 10 years, and still generates billions in annual revenue exactly two centuries later.

The Mirage of Cheaper AI

There’s a secondary aspect to this data center spending bonanza that is deeply tied to the startup ecosystem, and that we believe many are overlooking: the assumption that AI is getting cheaper. The thinking goes that increasing investment in infrastructure, combined with advances at the foundation model level, will drive prices down.

On the surface, some numbers look encouraging. Entry-level models like GPT-5 Nano now cost around 10 cents per million tokens, more than 30x cheaper than full GPT-5, which runs $3.40 per million tokens. It’s tempting to read this as proof that Moore’s-law-style efficiency is driving AI toward sustainable economics. But cheaper inputs have not translated into lower system-level costs. In fact, the opposite is happening.

The rise of reasoning-intensive AI, where models re-run queries, scour the web, or spin up mini-programs to validate results, has expanded compute demand per task. AI agents chain actions together, sometimes working for minutes or hours. Despite declines of up to 90% in cost per token, ballooning token requirements can result in a 10x increase in total cost. A Wall Street Journal analysis of task complexity illustrates this vividly.

As models get “smarter,” they also get hungrier. We already see these dynamics quite clearly in startup financials. Notion’s Ivan Zhao has been open about these challenges: two years ago, the company had typical SaaS margins of about 90%. Now, roughly 10 percentage points of that margin go to model providers to power Notion’s latest AI features. This is by no means catastrophic for a business like Notion, but it illustrates a broader trend.

Cursor, a code-focused AI assistant, reportedly spends nearly as much on Anthropic as it earns in revenue, resulting in a gross margin that is zero or even negative. In another sign of the rampant cross-dealing in the AI world right now, Cursor is both Anthropic’s biggest customer and a direct competitor.

According to reports from The Information, Perplexity, the popular search startup, spent 164% of its 2024 revenue on bills from AWS, Anthropic, and OpenAI. As Replit reportedly grew from $2M in August 2024 to $144M in July 2025, the cost of licensing the AI models that drive its agents resulted in volatile gross margins, ranging from negative 14% to as high as 36% this year. Another competitor, Lovable, reportedly has gross margins of around 35%. Lovable CEO Anton Osika expects margins to improve as model costs decrease, adding that the cost of AI “could be multiple times cheaper in three years.” Three years is an eternity in modern AI, after all, but the outcome is by no means clear-cut.
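The gross margin arithmetic behind these examples is simple, but it shows how quickly model bills can dominate. This sketch is a simplification: it treats the reported model and cloud bills as the only cost of revenue (for Notion, the other ~10% of costs is inferred from the 90% SaaS margin mentioned above), so real reported margins may differ.

```python
def gross_margin(revenue: float, cost_of_revenue: float) -> float:
    """Gross margin as a fraction of revenue."""
    return (revenue - cost_of_revenue) / revenue


# Simplification: reported model/cloud bills treated as the only cost of revenue.
examples = {
    "Notion (~10% other costs + ~10 pts to model providers)": gross_margin(100, 20),
    "Cursor (model spend roughly equal to revenue)": gross_margin(100, 100),
    "Perplexity 2024 (bills = 164% of revenue)": gross_margin(100, 164),
}
for name, margin in examples.items():
    print(f"{name}: {margin:.0%}")

# Replit's reported growth, $2M (Aug 2024) to $144M (Jul 2025), as a multiple.
print(f"Replit growth multiple: {144 / 2:.0f}x")
```

This yields roughly 80% for Notion, 0% for Cursor, and -64% for Perplexity.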

Aaron Levie at Box frames this argument in terms of the Jevons paradox.

The key point, thus, is not that "AI is getting more expensive"; instead, it's that because it's getting cheaper and more capable, we're using more of it to solve problems better.

AI will both simultaneously always be getting cheaper, and more expensive.

We are sympathetic to this argument: if competition and economies of scale drive down per-token inference costs, yet apps continue to take on higher-complexity tasks to expand their performance and capabilities (in turn increasing their token usage), AI is becoming both cheaper and more expensive at the same time.
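Levie’s point reduces to price times volume. In this minimal sketch, the prices and token counts are hypothetical, chosen only to match the “up to 90%” and “10x” figures cited earlier: cost per task is the per-token price multiplied by tokens per task, so a 90% price cut combined with 100x more tokens per task yields a 10x higher cost.

```python
def cost_per_task(price_per_million: float, tokens_per_task: float) -> float:
    """Dollar cost of one task at a given per-million-token price."""
    return price_per_million * tokens_per_task / 1_000_000


# Hypothetical numbers: a 90% per-token price cut alongside 100x more tokens per task.
before = cost_per_task(price_per_million=10.0, tokens_per_task=10_000)
after = cost_per_task(price_per_million=1.0, tokens_per_task=1_000_000)
print(f"before ${before:.2f}, after ${after:.2f}, change {after / before:.0f}x")

# Required token growth: token_multiple = cost_multiple / price_multiple.
print(f"tokens must grow {10 / (1 - 0.90):.0f}x for a 90% price cut to become a 10x cost increase")
```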

There is, of course, an alternative view: that model and cloud providers will look to capture more dollars, not fewer, to recoup their significant capital investments. In other words, the margin improvements from the declining cost of tokens would be increasingly offset as model companies, cloud providers, and chipmakers seek to recoup those investments. Given the magnitude of the opportunity, we imagine OpenAI et al. will play this very carefully.

There may be clues to future monetization in how OpenAI has evolved its API pricing to date. For example, the increasing ratio of output to input CPMT (cost per million tokens) might suggest it wants to tax the most complex use cases (which are likely the most valuable, and whose customers may have bigger balance sheets) without discouraging smaller-scale usage. We can also look for hints to players who have more to prove and are hence more aggressive in experimenting with monetization: Perplexity’s testing of ads and commerce hasn’t yet gained much momentum as a path to AI revenue.
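One way to read the output/input ratio point: raising output prices relative to input prices falls hardest on output-heavy workloads such as long reasoning traces or agentic tasks. The sketch below uses hypothetical prices, token counts, and ratios, not OpenAI’s actual price list.

```python
def request_cost(input_tokens: int, output_tokens: int,
                 input_price: float, output_price: float) -> float:
    """Dollar cost of one API request; prices are per million tokens."""
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


INPUT_PRICE = 1.0  # hypothetical $ per million input tokens
for ratio in (3, 8):  # hypothetical output/input price ratios
    output_price = INPUT_PRICE * ratio
    chat = request_cost(2_000, 500, INPUT_PRICE, output_price)        # short answer
    agentic = request_cost(2_000, 20_000, INPUT_PRICE, output_price)  # long, output-heavy task
    print(f"ratio {ratio}x: chat ${chat:.4f}, agentic ${agentic:.4f}")
```

Moving from a 3x to an 8x ratio raises the short chat request’s cost by about 1.7x but the output-heavy task’s cost by about 2.6x, the kind of asymmetry that would let a provider tax complex use cases while leaving light usage comparatively cheap.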

The concern for startups is that they fall into a “structural trap” of sorts: as inference costs continue to decline, workloads (and token usage) continue to expand. Cheaper tokens are irrelevant if every “smarter” task requires an order of magnitude more of them. The result could be a continual margin squeeze to stay ahead of the competition. Apps that need access to the best models to retain customers may not see AI costs decrease as expected, making software-like margins a mirage.
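The structural trap can be sketched as a race between two annual rates. In this hypothetical model (all parameters are our own illustrative assumptions), the app’s price per task is held flat, the per-token price falls by `price_decline` each year, and tokens per task grow by `token_growth`; margins expand only when usage grows more slowly than prices fall.

```python
def margin_path(years: int, price_decline: float, token_growth: float,
                revenue_per_task: float = 1.0, start_cost: float = 0.3) -> list[float]:
    """Gross margin per task for each year, holding the app's price per task flat while
    per-token prices fall by `price_decline` and tokens per task grow by `token_growth`
    each year. All parameters are hypothetical."""
    margins = []
    cost = start_cost
    for _ in range(years):
        margins.append(round(1 - cost / revenue_per_task, 3))
        # Next year's cost per task: cheaper tokens, but more of them.
        cost *= (1 - price_decline) * (1 + token_growth)
    return margins


print(margin_path(4, price_decline=0.5, token_growth=0.5))  # usage grows slower than prices fall
print(margin_path(4, price_decline=0.5, token_growth=2.0))  # usage grows faster than prices fall
```

With a 50% annual price decline, 50% token growth lets margins expand each year, while 200% token growth erodes them until they turn negative in year four.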

We thought this perspective from Newcomer illustrated well the unique situation we find ourselves in as a new software infrastructure layer grows up:

Money-losing businesses are of course endemic to Silicon Valley… What’s a little less common is building a money losing business on top of another money losing business. Typically the platforms themselves aren’t also money pits, but that’s not the case in the AI moment. Cursor building on top of Anthropic is like DoorDash building on top of Uber, one investor put it.

The combination of the current unprofitability of both the infrastructure (AI data centers) and platform (foundation models) layers is a worrying one. Of course, many of the greatest stories in technological innovation required early creativity around margins: Uber, DoorDash, Netflix, and Amazon, whose founder famously said, “Your margin is my opportunity.” The reality is that the shift from on-premises to cloud software cost about 10 points of gross margin in perpetuity. If the shift to AI-native software costs another 10 points, and our assumptions regarding the TAM-expanding potential of AI are even directionally correct, that is likely a price worth paying.
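The “price worth paying” argument can be framed as a breakeven: if gross margin falls, how much must revenue grow (for example, through the TAM expansion discussed above) to keep gross profit dollars flat? The 80% starting margin below is a hypothetical, not a figure from the text.

```python
def breakeven_revenue_growth(old_margin: float, new_margin: float) -> float:
    """Revenue growth needed to keep gross profit dollars flat when gross margin falls."""
    return old_margin / new_margin - 1


# Hypothetical: a cloud software business at 80% gross margin that cedes 10 points to AI costs.
growth = breakeven_revenue_growth(0.80, 0.70)
print(f"Revenue must grow {growth:.1%} to hold gross profit flat")  # 14.3%
```

A 10-point drop from 80% to 70% needs roughly 14% more revenue to break even on gross profit dollars, a modest hurdle if AI meaningfully expands the addressable market.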

AI-native software businesses, at least those in the vertical ecosystems we invest in at Euclid, don’t need to provide every whiz-bang functionality a model can offer. They need to solve real problems for their customers. Given how quickly commodity models catch up to their frontier peers, we believe there is a substantial likelihood that Vertical AI’s cost per task will decline over time. As always, companies are well-positioned if they obsess over efficiency, own their unit economics, invest in closed-loop improvement, and build in vertical or high-ARPU customer segments where there is defensibility to be had.

The Cost of Apotheosis

Leaders at Google, Microsoft, and Meta have been asked about these spending levels recently, and their responses are illuminating. Gavin Baker, CIO at Atreides Management, shared his view on a recent podcast:

Mark Zuckerberg, Satya and Sundar just told you in different ways, we are not even thinking about ROI. And the reason they said that is because the people who actually control these companies, the founders, there's either super-voting stock or significant influence in the case of Microsoft, believe they’re in a race to create a Digital God.

Gavin later adds that “Larry Page has evidently said internally at Google many times, ‘I am willing to go bankrupt rather than lose this race.’” When asked directly, Zuckerberg has effectively confirmed a similar, if more sanitized, disposition:

If we end up misspending a couple of hundred billion dollars, I think that that is going to be very unfortunate obviously. But what I'd say is I actually think the risk is higher on the other side. If you if you build too slowly and then super intelligence is possible in 3 years, but you built it out assuming it would be there in 5 years, then you're just out of position on what I think is going to be the most important technology [in history].

This logic underpins much of today’s capital inflows: investors are betting that even if today’s spending looks unsustainable, the eventual size of the pie will more than compensate for early losses. What level of spending can’t be justified by the pursuit of a “digital God?” But history urges caution. Infrastructure bubbles rarely resolve cleanly. We’re now at a stage where half of GDP growth is driven by infrastructure spending on AI. Telecom’s overbuild required bankruptcies and asset fire sales. Canals in the 18th century and railroads in the 19th century followed a similar arc. When capital intensity runs this far ahead of revenues, time alone is rarely the cure.

When David Cahn wrote about AI’s $200B problem in 2023, it was a yellow flag. Two years later, the mismatch has tripled (soon to be 5x), financing has become even more exotic, and the payback math has become even more challenging.

We can’t help but feel there is an uncanny symmetry between hyperscaler logic and the hyper-aggregation mindset of VC platform funds today. We wrote about the mega-fund perspective a few weeks ago:

Returns aside, there is a more obvious and immediate effect: the concentration of capital in “asset managers” requires a corresponding concentration of capital in companies. You can’t raise your next fund if you can’t deploy the first. Tiger Global’s rise and fall demonstrated the pitfalls of the high-velocity, low-touch investment model. The only other option available—and frankly an easier one, given sourcing and underwriting don’t scale linearly with check size—is to concentrate capital in specific assets. What safer place to do it than in perceived winners in a market you can argue is winner-takes-all?

There is a primitive psychology behind it all. If you are a scale player in a market wherein the power law is the consensus view and the opportunity can be portrayed as winner-takes-all, there is really one rational strategy: spend as much as you can to win, regardless of cost or ROI. Of course, this logic is both circular and self-perpetuating: it’s a narrative manufactured to drive public perception and attract capital with little basis in fact. It seems, however, that this perception is now the prevailing narrative. No scale of capital, valuations, burn, or AUM is too high in the pursuit of a digital god!

As we’ve discussed in prior essays, this thinking poses systemic risks to the venture capital industry. For every billion-dollar AI investment that justifies its price, there will be dozens that do not. The danger is not that AI won’t transform industries (it is, and it will) but rather that capital allocators mistake the inevitability of impact for the inevitability of returns. Or, for that matter, that they mistake scale for an indicator of guaranteed success, when venture capital statistics demonstrate literally the opposite. So, what is an investor to do, whether they are a VC manager or a limited partner?

At Euclid, we are not in the business of macroeconomic predictions. We’re not qualified to time market cycles or bubbles, and we question whether anyone truly is. In early 2016, Benchmark GP Bill Gurley, in our view one of the more level-headed venture commentators out there, wrote about the frothy valuation environment of the time in his “On the Road to Recap.” The ensuing “reset” wouldn’t occur until six years later.

Our key principles at Euclid remain unchanged. We believe that Vertical AI, both in terms of its focus on real-world applications and its relative under-reliance on overcapitalization to win, will remain a highly attractive theme for decades. Even without further meaningful improvements in foundation models, the installation phase of current-generation LLMs across vertical markets represents a generational opportunity in itself.

We bet on founders solving real industry problems, drawing on their direct experience building, selling, or working in that vertical. Our sole focus on identifying and backing those founders from Day Zero leaves Euclid somewhat insulated from the downstream capital frenzy, which in turn gives us the space to remain focused on core investment principles: partnering with businesses with near-zero marginal costs, compounding advantages, and, hopefully, lower CAC and higher defensibility at scale.

It is in times like these that we believe manager discipline is most essential, both in terms of valuations and investment pace. Beta hunting won’t work any better than bargain hunting. We’ll end where we started, with a perspective from David Cahn that resonated with us:

A huge amount of economic value is going to be created by AI. Company builders focused on delivering value to end users will be rewarded handsomely. We are living through what has the potential to be a generation-defining technology wave.

Speculative frenzies are part of technology, and so they are not something to be afraid of. Those who remain level-headed through this moment have the chance to build extremely important companies.

Thanks for reading Euclid Insights! Additional sources are listed below.[3] Euclid is a VC firm partnering with Vertical AI founders at inception. If anyone in your network is working on an idea in the space, we’d love to connect. Just drop us a line via DM or in the comments below.

[1] https://epoch.ai/blog/open-models-report

[3] David Cahn (Sequoia). AI’s $200B Question (2023) & AI’s $600B Question (2024)

Financial Times (2025). ‘Absolutely immense’: the companies on the hook for the $3tn AI building boom

Financial Times (2025). Investors glimpse pay-off for Big Tech’s mammoth spending on AI arms race

WSJ (2025). Cutting-Edge AI Was Supposed to Get Cheaper. It’s More Expensive Than Ever

The Information (2025). ‘AI Native’ Apps’ $18.5 Billion Annualized Revenues Rebut MIT’s Skeptical Study

The Information (2025). For Google Challenger Perplexity, Growth Comes at a High Cost

The Economist (2025). The $4trn accounting puzzle at the heart of the AI cloud

Newcomer (2025). Cursor’s Popularity Has Come at a Cost. GPT-5 May Have Arrived at the Right Time.
