Enterprise AI adoption isn’t “failing” because the tech isn’t ready. It’s slow because most founders are still trying to ship it like horizontal SaaS — and the enterprise vertical AI playbook is fundamentally different. And while founders are figuring out the enterprise GTM motion for Vertical AI, big companies are still figuring out how to test, procure, and implement it. The net result is that — while we’ve seen more PLG-like Vertical AI dominate the “league tables” — enterprise Vertical AI represents a multi-trillion-dollar white space opportunity.

Everyone talks about how the revenue bar has irreversibly risen in the AI era. “You’re dead if you don’t hit $XM in your first 12 months.” In its first year, EvolutionIQ worked with a single design partner, bringing in ~$50k total. A few years in, however, once they had optimized the product and enterprise workflow, they hit 8-figure ARR shortly after, with a very different growth profile.

This episode breaks down how EvolutionIQ became one of the first true breakout Vertical AI exits — selling to CCC for $750M — by doing something unfashionable: deep, hands-on iteration with at-scale carriers until the product was undeniably compounding value.

Michael Saltzman, co-founder and co-CEO, joins us to walk through the unsexy blocking and tackling of those earliest days of EvolutionIQ. He unpacks the core of the problem they attacked in insurance: why claims was a massively underserved problem, how (in a world where revenue acceleration gets all the attention) cost savings on a massive base can be just as powerful, how they eschewed the classic approach of writing back into core systems (skirting the perennial integration problem) and built a “digital twin” of the data instead, and how they made results (not pitch decks) do the early selling.

He also shared a lesson important for every founder today: how to build a platform that can adapt as underlying technology improves quickly. EvolutionIQ began pre-LLMs but, thanks to their flexible architecture — and more importantly, thanks to their incredible early technical talent from Google — they adapted and assimilated without slowing down.

Michael’s insights in this episode are crucial for any Vertical AI founder selling a truly enterprise product, structuring deep big-company design partnerships, or grappling with the trade-offs between product complexity and early AI revenue milestone pressures.

This was one of our favorites to date!

A Word from Our Partner: Parafin

Embedded finance for founders

Parafin allows founders to instantly launch a white-labeled fintech suite: capital, corporate cards, and bank accounts. They help your customers grow and unlock a high-margin revenue stream for your platform.

I) Vertical Titan

Michael Saltzman — Co-founder & Co-CEO @ EvolutionIQ

The backstory

Michael started as a mechanical engineer, then joined Bridgewater Associates where he spent years analyzing insurers and learning how they talk about their businesses — especially where the money actually goes.

At Stanford, he reconnected with a longtime friend, Tom, who’d spent more than a decade doing AI work at Google. They were drawn to industries with massive unstructured data — and insurance claims stood out as an “ugly stepchild” with massive potential: huge dollars, low product attention, and mountains of documents.

Their insight was simple: carriers were investing heavily in distribution and underwriting, while claims — where a huge share of revenue gets paid out — remained under-tooled. If you could turn unstructured claims data into consistent guidance, even small accuracy gains would compound at enterprise scale.

The hardest part

Selling “guidance” (not automation) into a workforce that already believes it has strong expertise — and earning trust from the chief claims officer all the way to the frontline adjuster.

Building an integration strategy that didn’t break core systems: every carrier’s data is different, so EvolutionIQ had to build an interface layer to normalize inputs across wildly inconsistent stacks.

Creating a deployment motion where product and engineering were deeply involved, not just customer success — because value only shows up when the tool actually changes day-to-day behavior.

Proving measurable impact quickly enough to unlock expansion: long sales cycles are real in insurance, so the first module needed to show results inside a year so it could “pay for” the next.

Memorable lines from Michael

On first design partner: “At worst we would waste $50,000 of their dollars — and at best we’d build something they couldn’t buy.”

“We didn’t write anything back to the CRMs at all.”

“We didn’t even try to sell it until we had results from the first customer.”

What EvolutionIQ actually sells

The product isn’t a robotic process replacement. It’s closer to AI augmentation for claims professionals — an application wholly separate from their core systems of record that analyzes incoming claims and guides adjusters toward the best next actions.

In the complex sub-space of bodily injury and disability claims (their beachhead), value often comes from better decisions and earlier interventions. So it wasn’t just a matter of doing the same work faster. They had to sell hard ROI: we can empower your claims function with better risk decisioning that will directly lower your expense ratio and hence increase profitability.

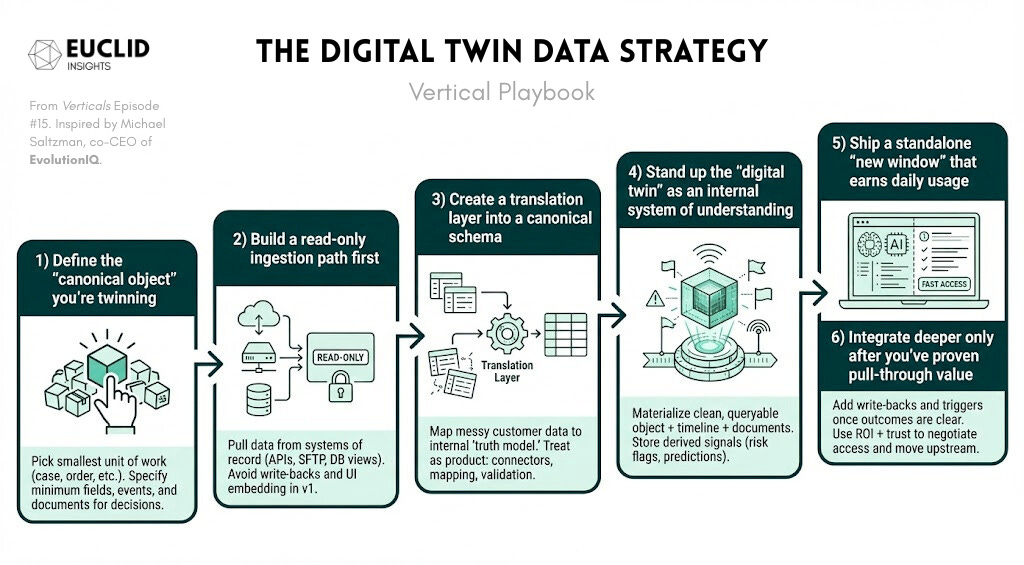

The “digital twin” integration strategy

Instead of trying to rip and replace carrier systems, EvolutionIQ built a digital twin of the carrier’s claims data in its own format.

They normalize inputs through an interface layer, run models against that normalized view, and avoid disruptive write-backs — which reduces implementation risk and makes scaling across many carriers technically feasible.

Time-to-value and expansion economics

Their north star wasn’t “implement everywhere.” It was “prove impact fast enough that the customer can expand based on results.”

They aimed to demonstrate measurable business outcomes within the first year, enabling expansion to be driven by real claim performance — not by selling harder — and creating a compounding “module pays for module” dynamic.

Getting customer one

Most prospects told them to come back when there was something real. Then they met an innovative carrier willing to co-build.

The first commercial relationship was essentially a small, bounded bet: a modest initial spend, a shared commitment to iterate, and an understanding that the product didn’t exist yet — the carrier wanted to build it with them.

Building moats in enterprise Vertical AI

A recurring theme was compounding advantage: the more deployments, the more robust the interface layer becomes, the more the product can improve across the stack, and the more deeply embedded it becomes in decision workflows.

In enterprise vertical AI, the moat isn’t just the model. It’s the combination of integration leverage, deployment trust, and a product that actually changes how the work gets done.

II) Vertical Playbook

The “Digital Twin” Approach to Vertical Data

Why it works

All too often, core-system integrations — especially ones reliant on sharp-elbowed, legacy incumbents — are where Vertical AI point-solution wedges face major headwinds. Not because they’re impossible to navigate (plenty do) but because they risk derailing your roadmap and momentum: “developer ecosystem” certifications, walled-garden API costs, IT queues, managing competitive concerns once revenue gets to $5-10M ARR.

The “digital twin” strategy takes a completely different approach. You rely on customer access to pull data out of core systems, normalize and enrich it, and deliver guidance in your own product surface without integration reliance. That lets you iterate fast, reduce reliance on slow incumbents, focus on ROI, and meanwhile, build a durable asset: a translation layer that learns how to interpret the “reality” of customer data regardless of the customer or SoR it came from.

The tradeoff, of course, is adoption risk. You’re asking users to keep yet another window open, and in markets with SaaS fatigue this can create friction. The only way that works is if the product is good enough that they want it open.

How to run it (EvolutionIQ-inspired blueprint)

Define the “canonical object” you’re twinning

Pick the smallest unit of work you’ll improve (a case, order, ticket, claim, patient encounter, shipment, etc.).

Specify the minimum fields / events / documents you need to drive decisions and measure outcomes.

Build a read-only ingestion path first

Start by pulling data out of systems of record (APIs, exports, event streams, SFTP, DB views).

Avoid write-backs and UI embedding in v1 so you’re not blocked by IT queues, vendors, or release cycles.

Create a translation layer into a canonical schema

For each customer, map their messy tables / fields into your internal “truth model.”

Treat this like a product: reusable connectors, mapping tooling, validation tests, and observability.

Goal: make every customer “look the same” once data lands in your platform.

Stand up the “digital twin” as an internal system of understanding

Materialize a clean, queryable representation of the canonical object + timeline + documents.

Store the derived signals you compute (risk flags, next-best-actions, summaries, predictions).

This becomes your independent data asset and lets models + workflow logic stay portable.

Ship a standalone “new window” that earns daily usage

Build the workflow surface where your AI is actually useful: prioritization, guidance, triage, escalation, audits.

Design for “side-by-side” use: fast load, low clicks, obvious next action, etc.

Adoption hack: make it the fastest way to answer the question users ask 50x a day.

Integrate deeper only after you’ve proven pull-through value

Once outcomes are clear and usage is sticky, selectively add write-backs, embedded widgets, or workflow triggers.

Integrate where it reduces friction (SSO, deep links, task creation).

Use ROI + trust to negotiate access and move upstream in the workflow.
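The first four steps of the blueprint above can be sketched in a few dozen lines. This is a minimal illustration, not EvolutionIQ's implementation: the `CanonicalClaim` fields, the two carrier mappings, the source field names, and the `DigitalTwin` class are all hypothetical, invented only to show how per-customer mappings can feed one canonical schema while the twin stays strictly read-only with respect to the system of record.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Any, Callable, Dict, List, Tuple


# Step 1 (hypothetical): the canonical object is a claim with a minimal field set.
@dataclass(frozen=True)
class CanonicalClaim:
    claim_id: str
    opened_on: date
    status: str        # normalized to "open" or "closed"
    reserve_usd: float


# Step 3: a per-customer mapping of canonical field -> (source field, converter).
FieldMap = Dict[str, Tuple[str, Callable[[Any], Any]]]

# Both mappings and all source field names below are made up for illustration.
CARRIER_A_MAP: FieldMap = {
    "claim_id": ("ClaimNo", str),
    "opened_on": ("OpenDt", date.fromisoformat),
    "status": ("Stat", lambda s: {"O": "open", "C": "closed"}[s]),
    "reserve_usd": ("ReserveAmt", float),
}
CARRIER_B_MAP: FieldMap = {
    "claim_id": ("id", str),
    "opened_on": ("created", lambda s: date.fromisoformat(s[:10])),
    "status": ("state", str.lower),
    "reserve_usd": ("reserve_cents", lambda c: int(c) / 100),
}


def to_canonical(raw: Dict[str, Any], mapping: FieldMap) -> CanonicalClaim:
    """Translate one raw source record into the canonical schema, with validation."""
    missing = [src for src, _ in mapping.values() if src not in raw]
    if missing:
        raise ValueError(f"missing source fields: {missing}")
    values = {name: convert(raw[src]) for name, (src, convert) in mapping.items()}
    claim = CanonicalClaim(**values)
    if claim.status not in {"open", "closed"}:
        raise ValueError(f"unrecognized status: {claim.status!r}")
    return claim


@dataclass
class TwinRecord:
    claim: CanonicalClaim
    signals: Dict[str, Any] = field(default_factory=dict)  # derived outputs


class DigitalTwin:
    """Step 4: an in-memory twin. Nothing here writes back to the source system."""

    def __init__(self) -> None:
        self._records: Dict[str, TwinRecord] = {}

    def ingest(self, raw: Dict[str, Any], mapping: FieldMap) -> TwinRecord:
        # Step 2: data only flows in; re-ingesting a claim keeps its signals.
        claim = to_canonical(raw, mapping)
        previous = self._records.get(claim.claim_id)
        record = TwinRecord(claim, previous.signals if previous else {})
        self._records[claim.claim_id] = record
        return record

    def add_signal(self, claim_id: str, name: str, value: Any) -> None:
        self._records[claim_id].signals[name] = value

    def open_claims(self) -> List[TwinRecord]:
        return [r for r in self._records.values() if r.claim.status == "open"]
```

A short usage example, again with invented records:

```python
twin = DigitalTwin()
twin.ingest({"ClaimNo": "A-1", "OpenDt": "2024-03-01", "Stat": "O", "ReserveAmt": "1200.50"}, CARRIER_A_MAP)
twin.ingest({"id": 77, "created": "2024-03-02T09:30:00", "state": "CLOSED", "reserve_cents": 99900}, CARRIER_B_MAP)
twin.add_signal("A-1", "needs_review", True)
print([r.claim.claim_id for r in twin.open_claims()])
```

The design point is that onboarding a new customer means adding a mapping, not new model or workflow code: everything downstream of `to_canonical` sees the same shape regardless of which system of record the data came from.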

Founder litmus tests

Can you ship product changes without waiting on a core-system release cycle?

Do you have a clear canonical schema — or are you re-building the data model per customer?

If you never wrote back into the system of record, would your product still change daily behavior?

Is your “new window” compelling enough that frontline users will keep it open, even with swivel-chair friction?

Can you explain recommendations in a way that a claims leader can trust and defend?

What we debated on-air

When “being inside the core” is actually necessary — and when it’s just a default assumption from the industry.

How much of the moat is the model versus the translation layer and data semantics you accumulate over time.

The real adoption tradeoff of a standalone UI: friction is real, but so is the leverage of owning your product surface.

III) Vertical Market Pulse

Enterprise ROI proof points mount in insurance

Travelers announces AI commitment, 20% profit hike | Claire Wilkinson @ Business Insurance

A growing set of large carriers and insurance players are moving away from “innovation theater” to reporting measurable ROI from AI adoption: straight-through processing, AI-supported underwriting review, and even AI voice agents for first notice of loss.

Takeaway: For founders, the bar is rising. It’s no longer enough to demo a model — you need a deployment story that survives production constraints, workforce adoption, and ROI scrutiny inside a regulated enterprise.

Insurance AI case study: labor & efficiency

Allianz to cut up to 1,800 jobs due to AI | Alexander Huebner @ Reuters

We have argued in the past that — viewed holistically — AI will be a net creator of jobs. That said, in particular roles and in the short term, there will undoubtedly be disruption… and receptionists and call centers have been some of the earliest to feel measurable pressure from AI.

The ROI gains covered in the previous pulse have begun to have equally tangible impacts on enterprise customer service. In insurance, high-volume, process-heavy operations are universal, making it a natural place to see developments like the one Allianz announced recently. Of course, some such announcements will be marketing-inspired, which is always important to keep in mind.

Takeaway: beyond the positive savings to the company and the negative implications for call center workers, we would call attention to a larger point. Winning products won’t just be “automation” that reduces headcount. They’ll be the systems that augment. Unless an enterprise is admitting it’s cutting service levels, fewer heads means each head must be more efficient. Winning Vertical AI focused on support staff will make humans materially faster and more accurate at processing the low-level tasks, and materially better at considering the high-impact 20% of decisions.

Insurance AI fundraising heats up in 2H 2025

Insurance AI deal roundup | Euclid Insights

Recent major fundraises in US insurance AI highlight an embrace of AI for both back-office and risk. In 2H 2025, two deals that stood out to us were Nirvana Insurance ($100M led by Valor for AI-driven trucking underwriting) and Liberate Innovations ($50M led by Battery for insurance reasoning agents). Smaller but significant rounds include Retireable ($10M led by IA Capital for AI-augmented retirement planning) and Quandri ($12M led by Framework for brokerage “digital workers”).

Next week on Verticals

Join us next week on Verticals to tackle Multiplayer Vertical AI with Mike Powers, co-founder and CEO of BuildVision!